Voici donc les graphiques :

J’ai fait un petit script pour envoyer les logs vers Grafana :

mysql -u root -e "CREATE DATABASE TEAMS;"

mysql -u root -e "USE TEAMS;DROP TABLE TEAMS_AUDIT;"

mysql -u root -e "USE TEAMS; CREATE TABLE TEAMS_AUDIT (DATE datetime, duration float, vdiMode int, eventpdclevel int);"

echo "Version 1.0";

mysql -u root -e "USE TEAMS; DELETE FROM TEAMS_AUDIT";

grep "desktop_foreground_duration" ~/.config/Microsoft/Microsoft\ Teams/logs.txt ~/.config/Microsoft/Microsoft\ Teams/old_logs_* | sed 's/:/ /g' | awk '{print $6 "-" $4 "-" $5 " " $7 ":" $8 ":" $9 " " $20 " " $24 " " $26}' | sed 's/,/ /g' | sed 's/Jun/06/g' | sed 's/May/05/g' > /tmp/audit_teams.txt

while read line

do

DATE=$(echo $line | awk '{print $1 " " $2}');

DURATION=$(echo $line | awk '{print $3}');

VDI=$(echo $line | awk '{print $4}');

LEVEL=$(echo $line | awk '{print $5}');

SQL="USE TEAMS; INSERT INTO TEAMS_AUDIT (DATE, duration, vdiMode, eventpdclevel) VALUES ('$DATE',$DURATION,$VDI,$LEVEL);"

#echo $SQL

mysql -u root -e "$SQL"

done < /tmp/audit_teams.txt

echo "Done"

Ensuite sur Grafana il suffit de faire :

– Pour avoir le temps passé (en seconde) par jour :

SELECT

UNIX_TIMESTAMP(date) as time_sec,

SUM(duration) as value

FROM TEAMS_AUDIT

WHERE

$__timeFilter(DATE)

GROUP BY day(date),month(date),year(date)

ORDER BY DATE

-Pour avoir le temps passé (en jours) par jour :

SELECT UNIX_TIMESTAMP(date) as time_sec, sum(duration)/60/60 as value FROM TEAMS_AUDIT WHERE $__timeFilter(DATE) GROUP BY day(date),month(date),year(date) ORDER BY DATE

– Pour avoir le nombre d’activation de la fenetre par jour:

SELECT

UNIX_TIMESTAMP(date) as time_sec,

count(duration) as value

FROM TEAMS_AUDIT

WHERE

$__timeFilter(DATE)

GROUP BY day(date),month(date),year(date)

ORDER BY DATE

Je viens de faire un nouveau programme en Python afin de mettre les données de ENEDIS sur MariaDB & Python.

Pour avoir les données de ENEDIS il faut aller sur https://mon-compte-particulier.enedis.fr/home-connectee/ et se faire un compte. Puis relier ce compte à la facture EDF … Je vais pas vous mentir c’est un peu de parcours du combattant. J’ai du faire appel à plusieurs fois au support afin que le lien puisse se faire. Misère.

Pour mieux comprendre les donnéesil faut lire : https://espace-client-particuliers.enedis.fr/documents/18080/5456906/pdf-producteurSuiviProduction/ebd9e049-5fd1-4769-9f87-b63e8c4b051c

Pour mieux comprendre les donnéesil faut lire : https://espace-client-particuliers.enedis.fr/documents/18080/5456906/pdf-producteurSuiviProduction/ebd9e049-5fd1-4769-9f87-b63e8c4b051c

EAS F1 à EAS F10 : le compteur Linky permet d’avoir jusqu’à 10 index de soutirage (à chaque index correspond un poste tarifaire de l’offre de votre Fournisseur)

EAS D1 à EAS D4 : 4 index de soutirage (calendrier Distributeur pour facturation de l’acheminement)

EAS T: Index Totalisateur du soutirage. Cet index sert à vérifier la cohérence entre la consommation affichée de la grille fournisseur et la consommation de la grille distributeur

J’ai fait cela sous Ubuntu mais Python fonctionne très bien sous Windows, MacOS, …

Il faut donc :

Un petit rappel sur l’ajout de database et user sur MariaDB/MySQL :

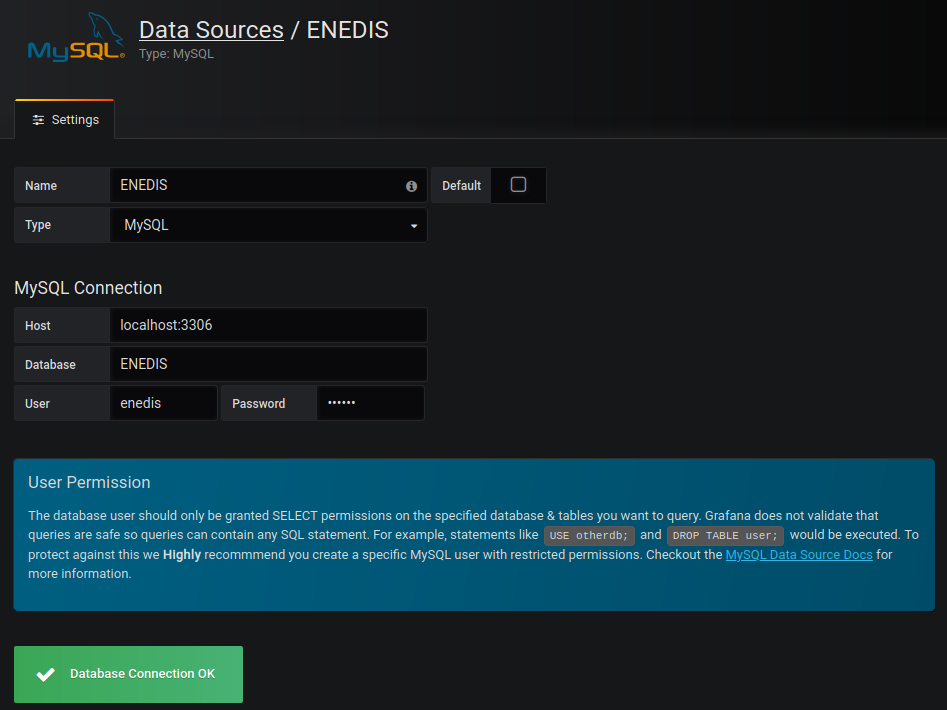

$ sudo mysql -u root [sudo] password for XXXX: Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 273026 Server version: 10.1.44-MariaDB-0ubuntu0.18.04.1 Ubuntu 18.04 Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]> create database ENEDIS; Query OK, 1 row affected (0.00 sec) MariaDB [(none)]> CREATE USER 'enedis'@'localhost' IDENTIFIED BY 'enedis'; Query OK, 0 rows affected (0.01 sec) MariaDB [(none)]> GRANT ALL PRIVILEGES ON ENEDIS.* TO 'enedis'@'localhost'; Query OK, 0 rows affected (0.00 sec) MariaDB [(none)]> FLUSH PRIVILEGES; Query OK, 0 rows affected (0.00 sec) MariaDB [(none)]> \quit Bye

Ensuite il faut faire le lien avec Grafana :

Voici le programme en Python ( La version 1 , que je vais améliorer par la suite ). A noter que vous devez mettre le path complet de votre fichier à la place de Enedis_Conso_Jour_XXXXX-XXXX_YYYYYY.csv.

Voici le programme en Python ( La version 1 , que je vais améliorer par la suite ). A noter que vous devez mettre le path complet de votre fichier à la place de Enedis_Conso_Jour_XXXXX-XXXX_YYYYYY.csv.

Les sources sont disponibles ici : https://github.com/farias06/Grafana/blob/master/ENEDIS_CSV_insert.py

#! /usr/bin/env python3 # -*-coding:Latin-1 -* # @author <@cyber-neurones.org> # Version 1 import csv from datetime import datetime import mysql.connector import re from mysql.connector import errorcode from mysql.connector import (connection) #import numpy as np def days_between(d1, d2): d1 = datetime.strptime(d1, "%Y-%m-%d %H:%M:%S") d2 = datetime.strptime(d2, "%Y-%m-%d %H:%M:%S") return abs((d2 - d1).days) def clean_tab(d): if d != "": return int(d); else: return 0 cnx = connection.MySQLConnection(user='enedis', password='enedis', host='127.0.0.1', database='ENEDIS') cursor = cnx.cursor(); now = datetime.now().date(); #cursor.execute("DROP TABLE COMPTEUR;"); #cursor.execute("CREATE TABLE COMPTEUR (DATE datetime,TYPE_RELEVE varchar(50),EAS_F1 int, EAS_F2 int, EAS_F3 int , EAS_F4 int, EAS_F5 int, EAS_F6 int , EAS_F7 int, EAS_F8 int, EAS_F9 int, EAS_F10 int, EAS_D1 int, EAS_D2 int, EAS_D3 int,EAS_D4 int, EAS_T int );"); cursor.execute("DELETE FROM COMPTEUR"); cnx.commit(); MyType_Previous = "None"; MyEAS_F1_Previous = 0; MyEAS_F1 = 0 Diff_EAS_T_int = 0 with open('Enedis_Conso_Jour_XXXXX-XXXX_YYYYYY.csv', 'r') as csvfile: reader = csv.reader(csvfile, delimiter=';') for row in reader: Nb = len(row); #row.replace(np.nan, 0) #print ("Nb:"+str(Nb)); if (Nb == 17): MyDate=row[0].replace("+02:00", "") MyDate=MyDate.replace("T", " ") MyDate=MyDate.replace("+01:00", "") MyType=row[1].replace("'", " ") if (MyType == "Arrêté quotidien"): MyEAS_F1=clean_tab(row[2]) MyEAS_F2=clean_tab(row[3]) MyEAS_F3=clean_tab(row[4]) MyEAS_F4=clean_tab(row[5]) MyEAS_F5=clean_tab(row[6]) MyEAS_F6=clean_tab(row[7]) MyEAS_F7=clean_tab(row[8]) MyEAS_F8=clean_tab(row[9]) MyEAS_F9=clean_tab(row[10]) MyEAS_F10=clean_tab(row[11]) MyEAS_D1=clean_tab(row[12]) MyEAS_D2=clean_tab(row[13]) MyEAS_D3=clean_tab(row[14]) MyEAS_D4=clean_tab(row[15]) MyEAS_T=clean_tab(row[16]) if (MyType_Previous == MyType): #print(MyType_Previous+"/"+MyType); Day=days_between(MyDate,MyDate_Previous); #print("Diff in days"+str(Day)); else: Day = 0 if (Day == 1): Diff_EAS_F1 = str(MyEAS_F1-MyEAS_F1_Previous); Diff_EAS_F2 = str(MyEAS_F2-MyEAS_F2_Previous); Diff_EAS_F3 = str(MyEAS_F3-MyEAS_F3_Previous); Diff_EAS_F4 = str(MyEAS_F4-MyEAS_F4_Previous); Diff_EAS_F5 = str(MyEAS_F5-MyEAS_F5_Previous); Diff_EAS_F6 = str(MyEAS_F6-MyEAS_F6_Previous); Diff_EAS_F7 = str(MyEAS_F7-MyEAS_F7_Previous); Diff_EAS_F8 = str(MyEAS_F8-MyEAS_F8_Previous); Diff_EAS_F9 = str(MyEAS_F9-MyEAS_F9_Previous); Diff_EAS_F10 = str(MyEAS_F10-MyEAS_F10_Previous); Diff_EAS_D1 = str(MyEAS_D1-MyEAS_D1_Previous); Diff_EAS_D2 = str(MyEAS_D2-MyEAS_D2_Previous); Diff_EAS_D3 = str(MyEAS_D3-MyEAS_D3_Previous); Diff_EAS_D4 = str(MyEAS_D4-MyEAS_D4_Previous); Diff_EAS_T_int = (MyEAS_T-MyEAS_T_Previous)/Day; Diff_EAS_T = str(Diff_EAS_T_int); if ((MyType == "Arrêté quotidien") and (Diff_EAS_T_int > 0)): try : Requesq_SQL="INSERT INTO COMPTEUR (DATE,TYPE_RELEVE,EAS_F1, EAS_F2, EAS_F3 , EAS_F4, EAS_F5, EAS_F6 , EAS_F7 , EAS_F8 , EAS_F9 , EAS_F10 , EAS_D1 , EAS_D2 , EAS_D3 ,EAS_D4 , EAS_T) VALUES ('"+MyDate+"', '"+MyType+"', "+Diff_EAS_F1+","+Diff_EAS_F2+", "+Diff_EAS_F3+", "+Diff_EAS_F4+", "+Diff_EAS_F5+", "+Diff_EAS_F6+", "+Diff_EAS_F7+","+Diff_EAS_F8+", "+Diff_EAS_F9+", "+Diff_EAS_F10+","+Diff_EAS_D1+","+Diff_EAS_D2+","+Diff_EAS_D3+","+Diff_EAS_D4+","+Diff_EAS_T+");"; #print Requesq_SQL; cursor.execute(Requesq_SQL); except mysql.connector.Error as err: print("Something went wrong: {}".format(err)) if err.errno == errorcode.ER_BAD_TABLE_ERROR: print("Creating table COMPTEUR") else: None if (Day > 1): print ("Day > 1 :"+str(Day)) Diff_EAS_F1 = str((MyEAS_F1-MyEAS_F1_Previous)/Day); Diff_EAS_F2 = str((MyEAS_F2-MyEAS_F2_Previous)/Day); Diff_EAS_F3 = str((MyEAS_F3-MyEAS_F3_Previous)/Day); Diff_EAS_F4 = str((MyEAS_F4-MyEAS_F4_Previous)/Day); Diff_EAS_F5 = str((MyEAS_F5-MyEAS_F5_Previous)/Day); Diff_EAS_F6 = str((MyEAS_F6-MyEAS_F6_Previous)/Day); Diff_EAS_F7 = str((MyEAS_F7-MyEAS_F7_Previous)/Day); Diff_EAS_F8 = str((MyEAS_F8-MyEAS_F8_Previous)/Day); Diff_EAS_F9 = str((MyEAS_F9-MyEAS_F9_Previous)/Day); Diff_EAS_F10 = str((MyEAS_F10-MyEAS_F10_Previous)/Day); Diff_EAS_D1 = str((MyEAS_D1-MyEAS_D1_Previous)/Day); Diff_EAS_D2 = str((MyEAS_D2-MyEAS_D2_Previous)/Day); Diff_EAS_D3 = str((MyEAS_D3-MyEAS_D3_Previous)/Day); Diff_EAS_D4 = str((MyEAS_D4-MyEAS_D4_Previous)/Day); Diff_EAS_T_int = (MyEAS_T-MyEAS_T_Previous)/Day; Diff_EAS_T = str(Diff_EAS_T_int); if ((MyType == "Arrêté quotidien") and (Diff_EAS_T_int > 0)): try : Requesq_SQL="INSERT INTO COMPTEUR (DATE,TYPE_RELEVE,EAS_F1, EAS_F2, EAS_F3 , EAS_F4, EAS_F5, EAS_F6 , EAS_F7 , EAS_F8 , EAS_F9 , EAS_F10 , EAS_D1 , EAS_D2 , EAS_D3 ,EAS_D4 , EAS_T) VALUES ('"+MyDate+"', '"+MyType+"', "+Diff_EAS_F1+","+Diff_EAS_F2+", "+Diff_EAS_F3+", "+Diff_EAS_F4+", "+Diff_EAS_F5+", "+Diff_EAS_F6+", "+Diff_EAS_F7+","+Diff_EAS_F8+", "+Diff_EAS_F9+", "+Diff_EAS_F10+","+Diff_EAS_D1+","+Diff_EAS_D2+","+Diff_EAS_D3+","+Diff_EAS_D4+","+Diff_EAS_T+");"; print Requesq_SQL; cursor.execute(Requesq_SQL); except mysql.connector.Error as err: print("Something went wrong: {}".format(err)) if err.errno == errorcode.ER_BAD_TABLE_ERROR: print("Creating table COMPTEUR") else: None # Save Previous if ((MyType == "Arrêté quotidien") and (Diff_EAS_T_int >= 0)): MyDate_Previous=MyDate; MyType_Previous=MyType; MyEAS_F1_Previous=MyEAS_F1; MyEAS_F2_Previous=MyEAS_F2; MyEAS_F3_Previous=MyEAS_F3; MyEAS_F4_Previous=MyEAS_F4; MyEAS_F5_Previous=MyEAS_F5; MyEAS_F6_Previous=MyEAS_F6; MyEAS_F7_Previous=MyEAS_F7; MyEAS_F8_Previous=MyEAS_F8; MyEAS_F9_Previous=MyEAS_F9; MyEAS_F10_Previous=MyEAS_F10; MyEAS_D1_Previous=MyEAS_D1; MyEAS_D2_Previous=MyEAS_D2; MyEAS_D3_Previous=MyEAS_D3; MyEAS_D4_Previous=MyEAS_D4; MyEAS_T_Previous=MyEAS_T; cnx.commit(); cursor.close(); cnx.close(); # END

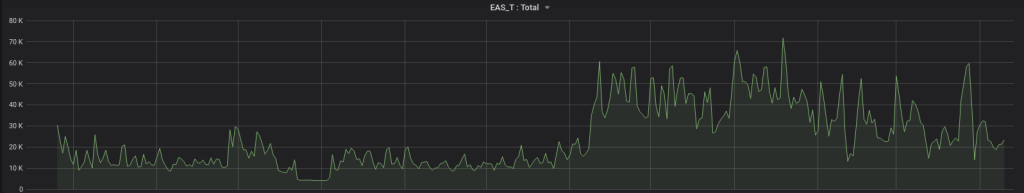

Ensuite on passe à la visualisation graphique :

SELECT

UNIX_TIMESTAMP(date) as time_sec,

EAS_T as value,

"TOTAL" as metric

FROM COMPTEUR

WHERE $__timeFilter(date)

ORDER BY date ASC

Ensuite les autres graphiques sont fonctions du forfait … pour ma part j’ai EAS D1 (Heures pleines):

Ensuite les autres graphiques sont fonctions du forfait … pour ma part j’ai EAS D1 (Heures pleines):

SELECT UNIX_TIMESTAMP(date) as time_sec, EAS_D1 as value, "Heures pleines" as metric FROM COMPTEUR WHERE $__timeFilter(date) ORDER BY date ASC

Et aussi EAS D2 (Nuit) :

SELECT UNIX_TIMESTAMP(date) as time_sec, EAS_D2 as value, "Heures creuses" as metric FROM COMPTEUR WHERE $__timeFilter(date) ORDER BY date ASC

Je vais améliorer les versions patiences …

Pour utiliser le script il faut:

Slack permet le téléchargement d’un fichier CSV ( access_logs.csv ), dont les données sont les suivantes :

Petit rappel sur l’ajout d’une database et d’un utilisateur :

$ sudo mysql -u root MariaDB [(none)]> create database SLACK; MariaDB [(none)]> CREATE USER 'slack'@'localhost' IDENTIFIED BY 'slack'; MariaDB [(none)]> GRANT ALL PRIVILEGES ON SLACK.* TO 'slack'@'localhost'; MariaDB [(none)]> FLUSH PRIVILEGES; MariaDB [(none)]> \quit Bye

Petit rappel aussi en python pour télécharger une classe non disponible :

$ sudo pip install python-dateutil

Le source du programme : ( Les sources sont disponibles ici : https://github.com/farias06/Grafana/blob/master/Slack_CSV_insert.py )

#! /usr/bin/env python3

# ~*~ utf-8 ~*~

import csv

from datetime import datetime

from dateutil.parser import parse

import mysql.connector

from mysql.connector import errorcode

from mysql.connector import (connection)

cnx = connection.MySQLConnection(user='slack', password='slack',

host='127.0.0.1',

database='SLACK')

cursor = cnx.cursor();

now = datetime.now().date();

#cursor.execute("DROP TABLE SLACK;");

#cursor.execute("CREATE TABLE SLACK (DATE datetime, DATE_LAST datetime, USER_AGENT varchar(50),USER_AGENT_FULL varchar(256), IP varchar(26), NUMBER int);");

cursor.execute("DELETE FROM SLACK");

cnx.commit();

with open('access_logs.csv', 'r') as csvfile:

reader = csv.reader(csvfile, quotechar='"')

for row in reader:

MyDate=row[0];

MyDate = MyDate.rsplit('(',1)[0];

if (MyDate == "Date Accessed"):

print("No");

else:

Dt = parse(MyDate)

MyUser=row[1];

MyUser=MyUser.replace("'", " ")

MyUserFull=row[2];

MyUserFull=MyUserFull.replace("'", " ")

MyIP=row[3];

MyNumber=row[4];

MyDateLast=row[5];

MyDateLast = MyDateLast.rsplit('(',1)[0];

DtLast = parse(MyDateLast)

try :

SQLREQUEST = "INSERT INTO SLACK (DATE, USER_AGENT, USER_AGENT_FULL, IP, DATE_LAST, NUMBER) VALUES ('"+str(Dt.date())+" "+str(Dt.time())+"', '"+MyUser+"', '"+MyUserFull+"','"+MyIP+"', '"+str(DtLast.date())+" "+str(DtLast.time())+"', "+MyNumber+" );";

cursor.execute(SQLREQUEST);

except mysql.connector.Error as err:

print("Something went wrong: {}".format(err))

if err.errno == errorcode.ER_BAD_TABLE_ERROR:

print("Creating table SLACK")

else:

None

cnx.commit();

cursor.close();

cnx.close();

# END

Pour lancer le programme :

$ python Slack_CSV_insert.py

Ensuite pour voir les données il y a plusieurs requetes possibles pour le metric :

Par IP :

SELECT

UNIX_TIMESTAMP(date) as time_sec,

SUM(number) as value,

ip as metric

FROM SLACK

WHERE $__timeFilter(date)

GROUP BY day(date),month(date),year(date)

ORDER BY date ASC

Par User Agent :

SELECT

UNIX_TIMESTAMP(date) as time_sec,

SUM(number) as value,

user_agent as metric

FROM SLACK

WHERE $__timeFilter(date)

GROUP BY day(date),month(date),year(date)

ORDER BY date ASC

Par User Agent Full :

SELECT

UNIX_TIMESTAMP(date) as time_sec,

SUM(number) as value,

user_agent_full as metric

FROM SLACK

WHERE $__timeFilter(date)

GROUP BY day(date),month(date),year(date)

ORDER BY date ASC

J’ai noté un bug, j’utilise la version Desktop sous Linux et je n’ai pas de « Application de Bureau Linux » .